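This kind of buries the lede: with a small change, you can also compute ReLU(A @ B) @ C on NxN matrices with only N intermediates and no recomputation.

(In other words: a neural network.)

Aug 8, 23:42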
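The code in the attached image is not reproduced here, so the following is only a minimal pure-Python sketch of how the ReLU variant could work, assuming the trick is to process one row of A at a time. The function name `fused_relu_layer` and the helper `relu` are hypothetical, chosen for illustration.

```python
def relu(x):
    # Standard rectifier: clamp negative values to zero.
    return x if x > 0.0 else 0.0


def fused_relu_layer(A, B, C):
    """Compute ReLU(A @ B) @ C for square list-of-lists matrices.

    Assumption: rows are processed independently, so only one row of the
    hidden activations (N values) is alive at any time.
    """
    n = len(A)
    out = []
    for a_row in A:
        # hidden = ReLU(a_row @ B): the only intermediate storage, N values.
        hidden = [relu(sum(a_row[k] * B[k][j] for k in range(n)))
                  for j in range(n)]
        # The output row is hidden @ C; hidden is discarded afterwards.
        out.append([sum(hidden[k] * C[k][j] for k in range(n))
                    for j in range(n)])
    return out
```

Because ReLU is applied elementwise, it can be folded into the row buffer before the second product, so each hidden value is computed exactly once.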

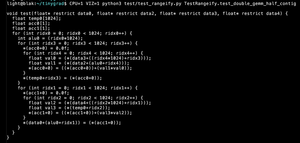

Everyone knows about Flash Attention. But do you know about Flash GEMM?

This code computes (A @ B) @ C on NxN matrices with N intermediates and no recomputation. If you don't use a BLAS library, you don't need to materialize the intermediate matrix.
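As a rough illustration of the claim above (not the code from the image), here is a pure-Python sketch under the assumption that the idea is to reuse a single N-element row buffer. The name `fused_matmul_chain` is hypothetical.

```python
def fused_matmul_chain(A, B, C):
    """Compute (A @ B) @ C for NxN list-of-lists matrices.

    Assumption: row i of the result depends only on row i of A @ B,
    so a single reusable buffer of N values replaces the N*N intermediate.
    """
    n = len(A)
    out = [[0.0] * n for _ in range(n)]
    row = [0.0] * n  # the only intermediate storage: one row of A @ B
    for i in range(n):
        # Fill the buffer: row = A[i, :] @ B
        for j in range(n):
            acc = 0.0
            for k in range(n):
                acc += A[i][k] * B[k][j]
            row[j] = acc
        # Consume the buffer: out[i, :] = row @ C
        for j in range(n):
            acc = 0.0
            for k in range(n):
                acc += row[k] * C[k][j]
            out[i][j] = acc
    return out
```

The multiply-add count matches two ordinary matrix products (2 * N^3), so nothing is recomputed; the full intermediate matrix is just never stored. Handing the two products to a BLAS library as separate GEMM calls would require the full N x N intermediate to exist in memory, which is presumably why the post makes the BLAS caveat.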
