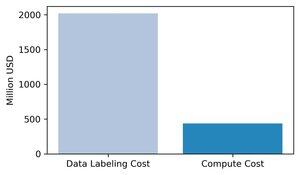

The prevailing wisdom is that compute is the most important factor for frontier AI training. We think this is wrong: data is the most costly and important component of AI training.

We collected revenue estimates for major data labeling companies and compared them with the marginal compute cost of training top models in 2024. Our estimates show that data labeling costs are ~3x the marginal training compute costs.

1/8

Our recent blog post breaks down the true cost of training today’s flagship models with concrete numbers and case studies

The full analysis is on Substack:

2/8

A snapshot of 2024: we calculated the annual revenue of major labeling firms (Scale, Surge, Mercor, Labelbox, …) and compared it with the marginal compute spend for training GPT-4o, Sonnet-3.5, Mistral-Large, Grok-2, and Llama-3-405B. Result: the labeling costs are roughly 3x the marginal compute costs.

3/8

From 2023 to 2024, we find that data-labeling industry revenue grew 88x, while training compute costs rose only 1.3x. That is a growth multiple ~70x larger for data labeling.

Note that we don't expect these trends to continue into 2025 and beyond: most of the growth came from Mercor, so growth rates will be lower even as total data costs keep increasing.
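As a quick check on the ~70x figure, here is a minimal sketch that uses only the two growth multiples quoted above. The variable names are illustrative, not taken from the underlying analysis.

```python
# Growth multiples quoted in the thread (2023 -> 2024).
labeling_growth = 88.0  # data-labeling industry revenue
compute_growth = 1.3    # marginal training compute cost

# How many times larger the labeling multiple is than the compute multiple.
relative_growth = labeling_growth / compute_growth

print(f"Labeling growth multiple is ~{relative_growth:.0f}x the compute multiple")
```

The ratio comes out just under 68, which the thread rounds to ~70x.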

4/8

Beyond industry revenues, individual models show the same pattern. MiniMax-M1 needed <$1M in compute to reach Claude-Opus-4 quality, yet curating an RL dataset with 140k human annotations would cost ~$14M, 28x the training compute.
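The MiniMax-M1 numbers can be unpacked with a little arithmetic. This is a rough sketch built only from the figures quoted above; the per-annotation price and the compute figure are implied by those numbers, not stated separately in the thread.

```python
# Figures quoted in the thread for the MiniMax-M1 case study.
annotations = 140_000           # human annotations in the RL dataset
dataset_cost_usd = 14_000_000   # estimated curation cost (~$14M)
stated_ratio = 28               # data cost as a multiple of training compute

# Implied average price per human annotation.
cost_per_annotation = dataset_cost_usd / annotations

# Training compute implied by the stated 28x ratio.
implied_compute_usd = dataset_cost_usd / stated_ratio

print(f"Implied cost per annotation: ${cost_per_annotation:,.0f}")
print(f"Implied training compute: ${implied_compute_usd:,.0f}")
```

The implied compute of about $0.5M is consistent with the "<$1M" figure quoted above.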

5/8

Similarly, SkyRL-SQL matched GPT-4o on text-to-SQL with just $360 of training compute, but the 600 expert-annotated queries used in post-training cost ~$60K
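The same back-of-the-envelope check works for SkyRL-SQL. A minimal sketch, again using only the quoted figures; the data-to-compute ratio is derived here and is not a number stated in the thread.

```python
# Figures quoted in the thread for the SkyRL-SQL case study.
compute_usd = 360              # training compute
annotation_cost_usd = 60_000   # cost of the expert-annotated queries (~$60K)
queries = 600                  # expert-annotated queries used in post-training

# Implied average price per expert-annotated query.
cost_per_query = annotation_cost_usd / queries

# Data cost as a multiple of training compute.
data_to_compute = annotation_cost_usd / compute_usd

print(f"Implied cost per query: ${cost_per_query:,.0f}")
print(f"Data cost is ~{data_to_compute:.0f}x the training compute")
```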

6/8

We encourage organizations that track inputs to AI to also track human data costs, as we believe this is critical to understanding AI progress

7/8

Co-written with @maxYuxuanZhu

8/8
