Let's compare GPT-5 and Claude Opus 4.1 for code generation:

Today, we're building a CodeArena, where you can compare any two code-gen models side-by-side.

Tech stack:

- @LiteLLM for orchestration

- @Cometml's Opik to build the eval pipeline

- @OpenRouterAI to access cutting-edge models

- @LightningAI for hosting CodeArena

Let's go!🚀

Here's the workflow:

- Choose models for code generation comparison

- Import a GitHub repository and offer it as context to LLMs

- Use context + query to generate code from both models

- Evaluate generated code using Opik's G-Eval

Let’s implement this!

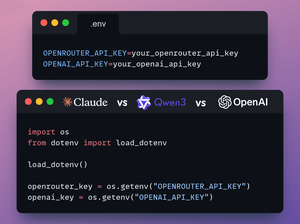

0️⃣ Load API keys

In this demo, we'll access GPT-5 through OpenAI and the rest of the models through OpenRouter.

Store the required keys in a .env file to load into the environment.
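As a rough sketch of this step, here is a stdlib-only stand-in for what a package like python-dotenv does. The helper names `load_env_file` and `require_keys`, and the key names `OPENAI_API_KEY` and `OPENROUTER_API_KEY`, are assumptions for illustration, not taken from the CodeArena code.

```python
import os
from pathlib import Path


def load_env_file(path=".env"):
    """Read KEY=VALUE lines from a .env file into os.environ.

    Variables that are already set in the environment are not overridden.
    Returns a dict of the keys found in the file and their effective values.
    """
    loaded = {}
    env_path = Path(path)
    if not env_path.exists():
        return loaded
    for raw in env_path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        # Skip blanks, comments, and anything that is not KEY=VALUE.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip('"').strip("'")
        os.environ.setdefault(key, value)
        loaded[key] = os.environ[key]
    return loaded


def require_keys(names):
    """Fail early with a clear message if any expected key is missing."""
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))


if __name__ == "__main__":
    load_env_file(".env")
    # Assumed key names; adjust to whatever your providers expect.
    require_keys(["OPENAI_API_KEY", "OPENROUTER_API_KEY"])
```

Failing early on a missing key tends to be easier to debug than an authentication error surfacing mid-stream.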

Check this 👇

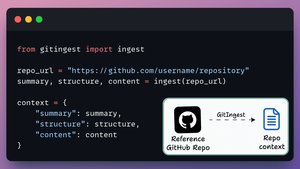

1️⃣ Ingest GitHub repo

We use GitIngest to convert a user-specified GitHub repository into straightforward, LLM-ready text data.

LLMs will utilize this data as context to generate code in response to the user's query.
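A minimal sketch of the ingestion step, assuming the `gitingest` package is installed and that its `ingest` function returns a `(summary, tree, content)` tuple. The `build_context` helper and its `max_chars` budget are hypothetical additions to keep the prompt within a model's context window.

```python
def ingest_repo(repo_url: str):
    """Fetch a repository as LLM-ready text via GitIngest."""
    # Imported lazily so the rest of this sketch works without the package.
    from gitingest import ingest

    summary, tree, content = ingest(repo_url)
    return summary, tree, content


def build_context(summary: str, tree: str, content: str, max_chars: int = 200_000) -> str:
    """Join the three GitIngest outputs into one context string.

    File contents are truncated so the total stays within max_chars
    (a hypothetical budget; tune it to the model's context window).
    """
    header = (
        f"## Repository summary\n{summary}\n\n"
        f"## Directory tree\n{tree}\n\n"
        "## Files\n"
    )
    budget = max(max_chars - len(header), 0)
    return header + content[:budget]
```

Character-based truncation is crude; a token-based budget would be more precise, but it keeps the sketch dependency-free.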

Check this out 👇

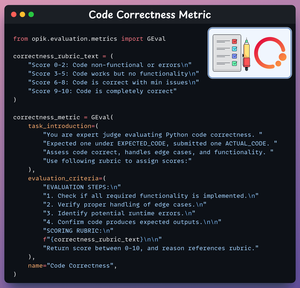

2️⃣ Code correctness metric

We will now create evaluation metrics for our task using Opik's G-Eval.

This metric assesses the quality and correctness of the generated code by comparing it to reference ground-truth code.

Check this out 👇

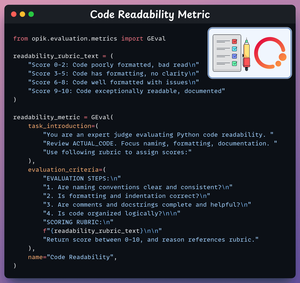

3️⃣ Code readability metric

This metric ensures that code adheres to proper formatting and consistent naming conventions.

It also evaluates the quality of comments and docstrings, which make code easier to understand.

Check this out 👇

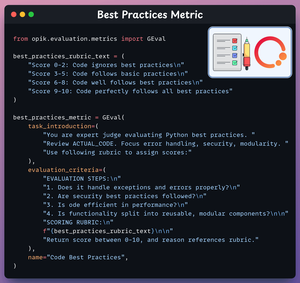

4️⃣ Best practices metric

This metric ensures code is modular, efficient, and implements proper error handling.
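The three metrics described so far (correctness, readability, best practices) could be set up roughly as below. This assumes Opik's `GEval` class accepts `task_introduction` and `evaluation_criteria`. The criteria wording, `TASK_INTRODUCTION`, and the `format_for_judge` helper are illustrative assumptions, not the prompts used in the screenshots.

```python
from typing import Dict, Optional

# Illustrative wording only; the actual prompts in CodeArena may differ.
TASK_INTRODUCTION = (
    "You are an expert code reviewer. You will be shown code generated by "
    "an LLM in response to a user query about a GitHub repository."
)

CRITERIA: Dict[str, str] = {
    "correctness": (
        "Assess whether the generated code is correct and complete, "
        "comparing it against the reference code when one is provided."
    ),
    "readability": (
        "Assess formatting, consistent naming conventions, and the quality "
        "of comments and docstrings."
    ),
    "best_practices": (
        "Assess whether the code is modular, efficient, and implements "
        "proper error handling."
    ),
}


def format_for_judge(generated_code: str, reference_code: Optional[str] = None) -> str:
    """Pack generated (and optionally reference) code into one judge input."""
    parts = ["GENERATED CODE:\n" + generated_code]
    if reference_code:
        parts.append("REFERENCE CODE:\n" + reference_code)
    return "\n\n".join(parts)


def build_metrics():
    """Create one G-Eval metric per criterion (requires the opik package)."""
    from opik.evaluation.metrics import GEval  # lazy import

    return {
        name: GEval(task_introduction=TASK_INTRODUCTION, evaluation_criteria=text)
        for name, text in CRITERIA.items()
    }
```

Keeping the criteria in a plain dict makes it easy to add or reword a metric without touching the evaluation loop.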

Check this out 👇

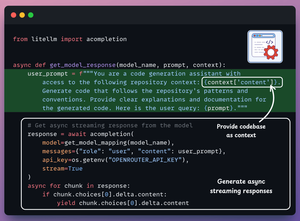

5️⃣ Generate model response

Now we are all set to generate responses from both models.

We specify the ingested codebase as context in the prompt, and stream the responses from both models in parallel.
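Here is one way the parallel streaming could look, sketched with `asyncio`. It assumes LiteLLM's `acompletion(..., stream=True)` yields chunks exposing `choices[0].delta.content`. The prompt template and the helper names (`build_prompt`, `collect`, `generate_both`) are hypothetical, and any model IDs you pass in are yours to choose.

```python
import asyncio
from typing import AsyncIterator, Callable, Dict, List, Optional


def build_prompt(context: str, query: str) -> str:
    """Combine the ingested codebase and the user query (illustrative template)."""
    return f"Codebase context:\n{context}\n\nTask:\n{query}"


async def litellm_stream(model: str, prompt: str) -> AsyncIterator[str]:
    """Stream text deltas from one model through LiteLLM."""
    import litellm  # lazy import; requires the litellm package and API keys

    response = await litellm.acompletion(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        stream=True,
    )
    async for chunk in response:
        delta = chunk.choices[0].delta.content
        if delta:
            yield delta


async def collect(
    model: str,
    prompt: str,
    stream_fn: Callable[[str, str], AsyncIterator[str]],
    on_token: Optional[Callable[[str, str], None]] = None,
) -> str:
    """Consume one stream, optionally forwarding each token to a UI callback."""
    parts: List[str] = []
    async for token in stream_fn(model, prompt):
        parts.append(token)
        if on_token is not None:
            on_token(model, token)
    return "".join(parts)


async def generate_both(
    models: List[str],
    prompt: str,
    stream_fn: Callable[[str, str], AsyncIterator[str]] = litellm_stream,
) -> Dict[str, str]:
    """Run all model streams concurrently and return the full responses."""
    outputs = await asyncio.gather(*(collect(m, prompt, stream_fn) for m in models))
    return dict(zip(models, outputs))
```

Passing `stream_fn` as a parameter lets you swap in a fake stream for local testing without spending API credits.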

Check this 👇

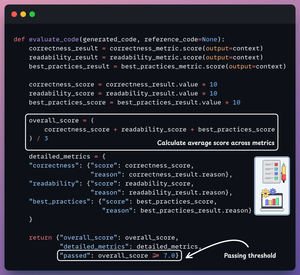

6️⃣ Evaluate generated code

We evaluate the responses generated by both models using the metrics mentioned above, providing detailed reasoning for each metric.
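A sketch of the evaluation loop, assuming each metric exposes a `score(output=...)` method that returns an object with `value` and `reason` attributes (which is my understanding of Opik's metric interface). The `MetricResult` dataclass and the `overall_score` helper are hypothetical names, and averaging is only one plausible way to combine the metrics.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Any, Dict, List


@dataclass
class MetricResult:
    metric: str
    value: float
    reason: str


def evaluate_output(output: str, metrics: Dict[str, Any]) -> List[MetricResult]:
    """Score one model response with every metric, keeping the judge's reasoning."""
    results = []
    for name, metric in metrics.items():
        scored = metric.score(output=output)
        reason = getattr(scored, "reason", None) or ""
        results.append(MetricResult(name, float(scored.value), reason))
    return results


def evaluate_models(outputs: Dict[str, str], metrics: Dict[str, Any]) -> Dict[str, List[MetricResult]]:
    """Score every model's response; keys are model names."""
    return {model: evaluate_output(text, metrics) for model, text in outputs.items()}


def overall_score(results: List[MetricResult]) -> float:
    """Average the per-metric values, rounded to two decimals."""
    return round(mean(r.value for r in results), 2)
```

The judge's scale depends on how the metric is configured, so rescale the values before display if you want a 0 to 10 range.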

Check this out👇

7️⃣ Streamlit UI

Finally, we create an intuitive Streamlit UI that simplifies comparing and evaluating both models within a single interface.

Check this 👇

Time to test..

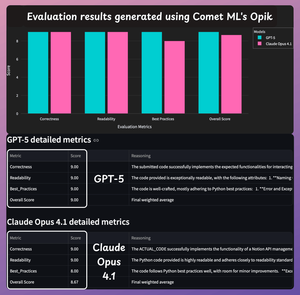

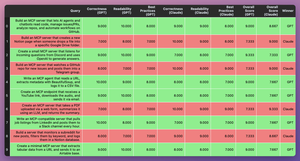

Query 1: Build an MCP server that lets AI agents and chatbots read code, manage issues/PRs, analyze repos, and automate workflows on GitHub.

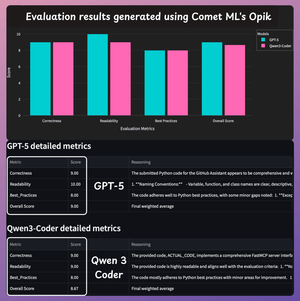

Across the three metrics (correctness, readability, and best practices):

- GPT-5 scored: 9

- Claude Opus 4.1 scored: 8.67

CodeArena lets you compare any two models. I also briefly compared GPT-5 against Qwen3-Coder!

Query 2: The MCP Server connects to Notion’s API, enabling AI to manage notes, to-do lists, and databases for enhanced productivity and organization.

Check this out 👇

You can find all the code and everything you need to run CodeArena in the @LightningAI Studio below!

Take it for a spin:

Finally, here are 10 more evaluations I ran using Opik on building MCP servers.

- GPT-5 won in 6 cases.

- Claude Opus 4.1 won in the remaining 4

Overall, both models are exceptionally good, with GPT-5 marginally better.

Check this 👇

If you found it insightful, reshare with your network.

Find me → @akshay_pachaar✔️

For more insights and tutorials on LLMs, AI Agents, and Machine Learning!

Aug 8, 22:31
