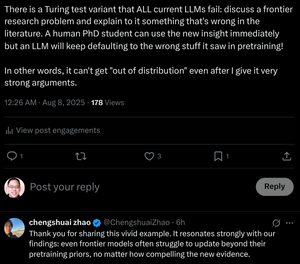

"Incluso los modelos de frontera luchan por actualizarse más allá de los priors de preentrenamiento, sin importar cuán convincente sea la nueva evidencia."

¡Entrenamos a estudiantes de doctorado para hacer esto! ¿Pueden los transformadores hacerlo sin cambiar sus pesos?

Aug 8, 07:29

Is Chain-of-Thought Reasoning of LLMs a Mirage?

... Our results reveal that CoT reasoning is a brittle mirage that vanishes when it is pushed beyond training distributions. This work offers a deeper understanding of why and when CoT reasoning fails, emphasizing the ongoing challenge of achieving genuine and generalizable reasoning.

... Our findings reveal that CoT reasoning works effectively when applied to in-distribution or near in-distribution data but becomes fragile and prone to failure even under moderate distribution shifts.

In some cases, LLMs generate fluent yet logically inconsistent reasoning steps. The results suggest that what appears to be structured reasoning can be a mirage, emerging from memorized or interpolated patterns in the training data rather than logical inference.

... Together, these findings suggest that LLMs are not principled reasoners but rather sophisticated simulators of reasoning-like text.
