
Louround 🥂

Co-founder of @a1research__ 🀄️ & @steak_studio 🥩

Louround 🥂 reposted

VLAs are still very new and a lot of people find it difficult to understand the difference between VLAs and LLMs.

Here is a deep dive into how these AI systems differ in reasoning, sensing, and action. Part 1.

Let's break down the key distinctions and how AI agents wrapped around an LLM differ from operator agents that use VLA models:

1. Sense: How they perceive the world

Agent (LLM): Processes text or structured data (e.g., JSON, API responses) and sometimes images. It’s like a brain working with clean, abstracted inputs. Think reading a manual or parsing a spreadsheet. Great for structured environments but limited by what’s fed to it.

Operator (VLA): Sees raw, real-time pixels from cameras, plus sensor data (e.g., touch, position) and proprioception (self-awareness of movement). It’s like navigating the world with eyes and senses, thriving in dynamic, messy settings like UIs or physical spaces.

2. Act: How they interact

Agent: Acts by calling functions, tools, or APIs. Imagine it as a manager sending precise instructions like “book a flight via Expedia API.” It’s deliberate but relies on pre-built tools and clear interfaces.

Operator: Executes continuous, low-level actions, like moving a mouse cursor, typing, or controlling robot joints. It’s like a skilled worker directly manipulating the environment, ideal for tasks requiring real-time precision.

3. Control: How they make decisions

Agent: Follows a slow, reflective loop: plan, call a tool, evaluate the result, repeat. It’s token-bound (limited by text processing) and network-bound (waiting for API responses). This makes it methodical but sluggish for real-time tasks.

Operator: Operates in real time, making stepwise decisions in a tight feedback loop. Think of it like a gamer reacting instantly to what’s on screen. This speed enables fluid interaction but demands robust real-time processing.
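To make the two control styles concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the `TOOLS` registry, the toy number-reaching task, and the proportional `gain` are made up, and no real LLM or VLA is involved. It only shows the shape of each loop: the agent plans, calls a tool, and evaluates, while the operator observes and corrects on every tick.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry: names and behaviour are illustrative only.
TOOLS: Dict[str, Callable[[int], int]] = {
    "double": lambda x: x * 2,
    "increment": lambda x: x + 1,
}


def agent_loop(start: int, goal: int, max_steps: int = 10) -> List[str]:
    """Slow reflective loop: plan, call a tool, evaluate the result, repeat."""
    value = start
    trace: List[str] = []
    for _ in range(max_steps):
        if value == goal:  # evaluate: stop once the goal is reached
            break
        # plan: pick a tool (a stand-in for an LLM deciding what to call)
        name = "double" if value > 0 and value * 2 <= goal else "increment"
        value = TOOLS[name](value)  # call the chosen tool
        trace.append(name)
    return trace


def operator_loop(position: float, target: float,
                  steps: int = 50, gain: float = 0.5) -> float:
    """Tight feedback loop: observe the error, apply a small correction, repeat."""
    for _ in range(steps):
        error = target - position   # observe
        position += gain * error    # act with a small low-level adjustment
    return position


if __name__ == "__main__":
    print(agent_loop(1, 10))
    print(operator_loop(0.0, 1.0))
```

The agent takes a handful of discrete, named steps, each of which could be a slow network call in practice. The operator takes many tiny steps, none of which is meaningful on its own.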

4. Data to Learn: What fuels their training

Agent: Trained on vast text corpora, instructions, documentation, or RAG (Retrieval-Augmented Generation) datasets. It learns from books, code, or FAQs, excelling at reasoning over structured knowledge.

Operator: Learns from demonstrations (e.g., videos of humans performing tasks), teleoperation logs, or reward signals. It’s like learning by watching and practicing, perfect for tasks where explicit instructions are scarce.

5. Failure Modes: Where they break

Agent: Prone to hallucination (making up answers) or brittle long-horizon plans that fall apart if one step fails. It’s like a strategist who overthinks or misreads the situation.

Operator: Faces covariate shift (when training data doesn’t match real-world conditions) or compounding errors in control (small mistakes snowball). It’s like a driver losing control on an unfamiliar road.
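A quick back-of-the-envelope sketch of why small mistakes snowball over long horizons. It assumes every step succeeds independently with the same probability, which is a simplification (real errors are correlated and some are recoverable), and the function name is made up for this example.

```python
def task_success_probability(step_success: float, steps: int) -> float:
    """Chance that all `steps` independent steps succeed in a row."""
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return step_success ** steps


if __name__ == "__main__":
    # Assumed 99% per-step reliability, compared over longer and longer tasks.
    for horizon in (10, 100, 500):
        print(horizon, round(task_success_probability(0.99, horizon), 3))
```

Under these assumptions, a 99% reliable step still leaves only about a 37% chance of completing a 100-step task without a single mistake, which is why both long agent plans and long control sequences need checks and recovery.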

6. Infra: The tech behind them

Agent: Relies on a prompt/router to decide which tools to call, a tool registry for available functions, and memory/RAG for context. It’s a modular setup, like a command center orchestrating tasks.

Operator: Needs video ingestion pipelines, an action server for real-time control, a safety shield to prevent harmful actions, and a replay buffer to store experiences. It’s a high-performance system built for dynamic environments.
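Two of the operator-side pieces, the safety shield and the replay buffer, can be sketched in a few lines. This is a toy version under stated assumptions: the `Action` type, the per-step clamp of `max_step`, and the string observations are all hypothetical, and production systems use far richer checks and storage.

```python
import random
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Tuple


@dataclass
class Action:
    dx: float  # requested cursor or joint movement along x
    dy: float  # requested cursor or joint movement along y


def safety_shield(action: Action, max_step: float = 5.0) -> Action:
    """Clamp each component so no single action moves more than max_step."""
    def clamp(value: float) -> float:
        return max(-max_step, min(max_step, value))
    return Action(clamp(action.dx), clamp(action.dy))


class ReplayBuffer:
    """Fixed-size store of (observation, action) pairs; oldest entries drop first."""

    def __init__(self, capacity: int) -> None:
        self._items: Deque[Tuple[str, Action]] = deque(maxlen=capacity)

    def add(self, observation: str, action: Action) -> None:
        self._items.append((observation, action))

    def sample(self, k: int) -> List[Tuple[str, Action]]:
        # Never ask for more items than the buffer currently holds.
        return random.sample(list(self._items), min(k, len(self._items)))

    def __len__(self) -> int:
        return len(self._items)


if __name__ == "__main__":
    buffer = ReplayBuffer(capacity=2)
    for frame, raw in [("frame-0", Action(12.0, -0.5)), ("frame-1", Action(1.0, 1.0))]:
        buffer.add(frame, safety_shield(raw))
    print(len(buffer), buffer.sample(1))
```

The shield sits between the model and the environment, so even a bad prediction results in a bounded action, and the buffer keeps recent experience around for later training.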

7. Where Each Shines: Their sweet spots

Agent: Dominates in workflows with clean APIs (e.g., automating business processes), reasoning over documents (e.g., summarizing reports), or code generation. It’s your go-to for structured, high-level tasks.

Operator: Excels in messy, API-less environments like navigating clunky UIs, controlling robots, or tackling game-like tasks. If it involves real-time interaction with unpredictable systems, VLA is king.

8. Mental Model: Planner + Doer

Think of the LLM Agent as the planner: it breaks complex tasks into clear, logical goals.

The VLA Operator is the doer, executing those goals by directly interacting with pixels or physical systems. A checker (another system or agent) monitors outcomes to ensure success.
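The planner + doer + checker split can be sketched as three small functions. All of it is a stand-in: the `planner` just splits a sentence on " then " instead of calling an LLM, the `doer` appends to a list instead of driving pixels or motors, and the names are invented for this example.

```python
from typing import List


def planner(task: str) -> List[str]:
    """Stand-in for an LLM agent: break a task into ordered sub-goals."""
    return [part.strip() for part in task.split(" then ") if part.strip()]


def doer(goal: str, world: List[str]) -> None:
    """Stand-in for a VLA operator: 'execute' a goal by changing the world."""
    world.append(goal)


def checker(goals: List[str], world: List[str]) -> bool:
    """Verify that every planned goal was carried out, in order."""
    return world == goals


def run(task: str) -> bool:
    goals = planner(task)
    world: List[str] = []
    for goal in goals:
        doer(goal, world)
    return checker(goals, world)


if __name__ == "__main__":
    print(run("open browser then search flights then book"))
```

Keeping the three roles separate means the slow, reflective part only runs once per task, while the fast part handles each goal and the checker catches anything that went wrong.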

$CODEC

21.72K

What if instead of "we have no buyers, that's why we look outwards (DATs)"

We just have a more sophisticated industry not buying bundled vaporware from celebrities anymore?

Which indeed relates to the takeaways of this post, but does not mean there are no buyers?

Kyle · Aug 21, 12:46

YZY was a very good litmus test as to where we are as a market

(+) on one hand, it had a nice 2x. Clearly, there is SOME incremental liquidity

(-) followed by a sell-off. I don't believe this is a "max extract" scenario - but rather lethargy. there is no organic demand willing to step in. the speculative fervor is over

if there is no extraction, nor buy pressure, the base case is just a slow bleed down (similar to PUMP upon launch)

the takeaways are the same as what I've been echoing over the past few weeks - we have no buyers, that's why we look outwards (DATs)

and the simple action to take is either

1) buy tokens that you believe will have buyers bc they are building something good

2) buy tokens that buy themselves

3) buy a different asset class

4) buy tokens that have an outside bid (DAT / tradfi)

5) sell everything else

6) short vaporware

4.28K

Dips in a bull market are meant to be bought, especially on projects with big catalysts

We all know that AI is the narrative of this cycle, started by ai16z and Virtuals last year.

My bet is that the market will focus on more complex and sophisticated technologies such as VLAs, and let me tell you why.

LLMs (Large Language Models) mainly read and write text: they’re great at explaining, planning, and generating instructions, but they don’t by themselves control motors or interact with the physical world (as you may have experienced with ChatGPT).

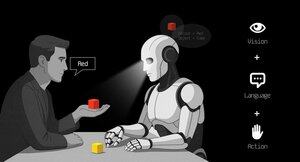

VLAs (Vision Language Action models) differ from LLMs as they are multimodal systems that look at things (vision), understand instructions (language), and directly produce actions. It's like telling a robot to pick up a red cup and the robot then moving its arm to do it.

VLAs are trained on examples that pair images/video + instructions + real action traces (how a robot actually moved), and they must run fast and safely in real time. LLMs, for their part, are trained on huge text collections and focus on reasoning and language tasks.

TL;DR: LLMs think and speak while VLAs see, reason, and act.

As you can see, VLAs are a major addition to LLMs and will notably enable robotics, the next 0-to-1 innovation in the overall economy. A majority of investment funds are allocating a large part of their capital to this sector, which they see as the next logical evolution of the AI industry.

I already made a post a while ago on the current leader in the crypto market, @codecopenflow, which did not raise capital (fair launch) yet is shipping cutting-edge products and currently sitting at $23M FDV.

For information, other crypto competitors raised $20M (@openmind_agi) at what is probably a $200M to $300M+ FDV while no product or community has been built and shipped yet.

What makes Codec a leading project in the sector is that they tackle a crucial bottleneck in robotics and AI: the difficulty of getting all the AI tools to work together. Let me explain.

Their latest release, OPTR (operator), is a toolkit that helps build operators capable of interacting on multiple platforms such as robots, desktops, browsers, or simulations. The objective of an operator is to see, reason, and act (VLA) in both digital (computers) and physical (robots) worlds.

This toolkit serves as core infrastructure for robotics teams aiming to test their product, and it streamlines the overall process by providing a unified experience instead of separate ones for web browsers, simulations, or robots. This essentially makes the operator adaptive and autonomous regardless of its environment.

So you get it: it will save a lot of time for companies and developers who previously had to go through each step manually, and where you save time, you save money.

It will also enable Codec to build their own operator projects and launch new capabilities relatively fast onto the market, notably through their marketplace.

TL;DR: You have probably seen videos of robots folding tissues, sorting boxes, or jumping on various elements. They have all been trained for one very specific use case, and unfortunately, a skill cannot be reused in another environment the way a human could. OPTR from Codec solves this by making skills transferable across environments and situations, making training and development a lot faster and cheaper for enterprises.

This is why Codec is so interesting in unifying the digital world with the physical world.

$CODEC, Coded.

8.46K

Louround 🥂 reposted

Top Crypto Voices to Follow

Here’s Part 2 of my curated list of must-follow Crypto KOLs:

@xerocooleth – Kaito star. Blends yapping with entertainment, uplifts small accounts, and posts non-stop.

@Louround_ – The Researchoor, Buildoor, Influencoor. A true multi-hat legend.

@thelearningpill – Yield connoisseur. Curator of “Gud Reads” and a proud Aptos maxi.

@arndxt_xo – Macro sensei. Substack writoor, connector of dots, enlightens CT daily.

@0xCheeezzyyyy – Yield maxi + infographic wizard. Pendle shilloor with clean visuals.

@eli5_defi – Infographic king. Simplifies DeFi like no other, always backing small accounts.

@theunipcs – From 16k → 13M with BONK. Ran USELESS to $300M+. If memes are your thing, he’s your guide.

@0xAndrewMoh – BNB chain maxi. Writes narrative threads. Owns 11 alts who’ll boost you if you follow him.

@poopmandefi – Name might be poop, but research is premium. Sharp takes on stablecoins, alts, VCs & more.

@rektdiomedes – Macro brain. Curator of @thedailydegenhq, liftoor, health maxi, and champion for small accounts.

@YashasEdu – Writes across DeFi, DeFAI, and L2s. Small for now, but future big-voice in the making.

@0x_Kun – Straight-shooter. Talks trading discipline, money management, and macro without the BS.

Parts 3 and 4 will be dropped in the coming weeks, so stay tuned!

36.95K

Protocols I use weekly (including wallets):

@HyperliquidX

@Lighter_xyz

@Rabby_io

@phantom

@DeBankDeFi

@DefiLlama Aggregator

@JupiterExchange

@debridge

@defidotapp

What else?

DeFi Warhol · Aug 15, 20:21

Protocols I use weekly (not including wallets):

@infinex

@0xfluid

@KaitoAI

@pendle_fi

@Infinit_Labs

@DriftProtocol

@HyperliquidX

@UseUniversalX

@babylonlabs_io

@JupiterExchange

What am I missing out on?

6.89K

Shopped more $HYPE on this dip. The rationale is very simple: when the market moves sharply (pumps or dumps), HL generates a ton of volume, fees, and liquidations, which are allocated to the buyback.

TL;DR: the house always wins.

Aside from this, it seems that the future of robotics, $CODEC, did not want to dip, so I bought more anyway.

That was a great, much-needed reset for markets to go higher. Now we carefully watch the rest of August 🤝

Edgy - The DeFi Edge 🗡️ · Aug 15, 10:16

Looks like just a dip to me, I’m expecting higher prices ahead. Time to go shopping. Which alts are on your radar right now?

2.82K
